(click here for introduction to Fooled By A Red Herring)

Induction is often contrasted with deduction. Both are types of reasoning. Deduction starts with general statements (e.g. all humans are mortal) and draws logical conclusions from them (e.g. X is human, therefore X is mortal). In its purest form, deduction follows a rigorous definition-theorem-proof format; that’s why mathematics and formal logic are said to be deductive sciences.

Induction, on the other hand, consists in inferring general conclusions from particular observations. Hence it's typically associated with the empirical sciences, from physics and biology to psychology and economics, which try to find patterns in observations and measurements. Empirical theories are assessed not only on their logical consistency, but also on their agreement with observable data.

An example of inductive reasoning is the one that inspired Taleb: All swans hitherto observed were white, therefore, all swans are white. The Black Swan is a textbook example of the (philosophical) problem of induction: No amount of data is ever sufficient to serve as ultimate proof for a general statement. The fact that you’ve seen many white swans but never a black one may strengthen your belief that there are no black swans, but a single black specimen is sufficient to overthrow it. Swans were long believed to be white without exception, but the generalized statement was refuted when black swans were discovered in Australia.

In my opinion, the anecdote is at best a further illustration of the difference between deduction and induction (a deductive inference, when valid, does not require empirical proof), but it’s got very little to do with how inductive reasoning is applied in science. A scientist doesn’t sit by a pond all day long, keeping a tally of swans swimming by, and then go home to write a paper with the title “An Empirical Proof for the Whiteness of Swans”.

The experiments and observational studies in science require a clever combination of deduction and induction: setting up hypotheses, collecting and analyzing data, putting the hypotheses to rigorous tests, and trying to rule out alternative explanations before drawing conclusions. Of course, as you repeat experiments, there's no guarantee that they will consistently show the same result. But unlike the general statement about swans, which a single black swan refutes despite millions of confirming observations, more interesting scientific facts are not tossed out the window after a single counterexample.

One reason is that in real-life experiments, what is actually tested is not only the hypothesis the scientists are attempting to prove (or disprove), but also a number of tacit assumptions they bring to the experiment. Incidentally, that's also an important criticism of philosopher Karl Popper's criterion of falsifiability. According to Popper, scientists should try to falsify theories rather than verify them, because one counterexample is sufficient for a falsification, whereas no amount of confirmatory evidence is sufficient for a complete verification. True, scientists ought to devise 'risky' experiments, that is, experiments that may prove them wrong. But to assume that a single counterexample is sufficient to settle a scientific question is a very naïve view of how science progresses.

A nice example of the complexities of scientific testing is the OPERA experiment's September 2011 result. The researchers involved in the project shocked the physics community with their announcement that they had measured neutrinos traveling faster than light. Following Karl Popper's dictum, that single experiment should have been enough to abandon Einstein's theory of relativity[1], one of the best theories presently available, rigorously tested and supported by decades of experimental and observational evidence. Needless to say, physicists not involved in the experiment were skeptical. Many other explanations could account for the anomaly. Elementary particle experiments rely on highly sensitive, highly sophisticated measurement equipment that requires years of preparation, and the accepted margin of error in its functioning is extremely small. Furthermore, the speed of a particle is not something you can read off a speedometer. It has to be calculated by subjecting an enormous volume of data to meticulous statistical analysis. Calculation errors are easily made, although in this case the risk was mitigated by the great care the scientists took in scrutinizing and double-checking their results.

As it turned out, the anomaly was caused by the equipment: an improperly attached fiber optic cable and a malfunctioning clock oscillator. What was refuted was not Einstein's theory of relativity, but the auxiliary assumption that all of the equipment worked as designed (other auxiliary assumptions are that the calculations are correct, that the experimental design is correct, that there are no unknown external influences, etc.).

In the human sciences (epidemiology, psychology, sociology, finance,…) many hypotheses are expressed as statistical laws. For example, the odds of getting lung cancer are 20 times higher if you are a smoker than if you are a non-smoker. That's a statistical law. And if someone wants to disconfirm it, they have to come up with a little bit more counterevidence than the skeptical smart-ass "whose aunt smoked 20 cigarettes a day for 70 years and died of old age at 90". One healthy swallow does not make a summer, and it's not a black swan either.
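To see why the aunt is no counterexample, it helps to convert the odds ratio into an absolute risk. The minimal sketch below does that; the odds ratio of 20 comes from the text, but the 1% baseline risk for non-smokers is a purely hypothetical number chosen for illustration, not an epidemiological estimate, and the function name `smoker_risk` is likewise made up.

```python
def smoker_risk(baseline_risk: float, odds_ratio: float) -> float:
    """Absolute risk in the exposed group, given the unexposed group's
    risk and the odds ratio between the two groups."""
    baseline_odds = baseline_risk / (1.0 - baseline_risk)  # risk -> odds
    exposed_odds = odds_ratio * baseline_odds              # apply the odds ratio
    return exposed_odds / (1.0 + exposed_odds)             # odds -> risk


# Hypothetical assumption: 1% of non-smokers develop lung cancer.
risk = smoker_risk(baseline_risk=0.01, odds_ratio=20.0)
print(f"smoker risk:    {risk:.3f}")
print(f"smokers spared: {1 - risk:.3f}")
```

Under these assumed numbers about 17% of smokers develop lung cancer and roughly five out of six do not, so a lifelong smoker who dies of old age is exactly what the statistical law predicts, many times over.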

To prove or disprove a statistical law, you need large data sets (and preferably also a strong background in statistics, so that you know how to avoid Type I and Type II errors). Examples of skeptical smart-asses in finance are those critics of the Efficient-Market Hypothesis (EMH) who are quick to announce the demise of the EMH every time some stock they've been following takes a nosedive. For another example, read my review of Emanuel Derman's "Models. Behaving. Badly", in which Professor Derman fails to recognize the statistical nature of another famous theory in finance, the CAPM (Capital Asset Pricing Model).
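The point about large data sets and Type I/II errors can be made quantitative. The sketch below uses the textbook normal approximation for the power of a one-sided two-proportion z-test (power is one minus the Type II error rate), with the Type I error rate fixed at 5%. The group risks of about 16.8% for smokers and 1% for non-smokers are hypothetical illustrative values, and `approx_power` is an illustrative name, not a library function.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(p_exposed: float, p_control: float, n_per_group: int,
                 alpha: float = 0.05) -> float:
    """Approximate power of a one-sided two-proportion z-test
    (normal approximation, equal group sizes)."""
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1.0 - alpha)      # critical value set by the Type I error rate
    p_bar = (p_exposed + p_control) / 2.0
    # Standard error of the difference if there were no effect (null hypothesis).
    se_null = sqrt(2.0 * p_bar * (1.0 - p_bar) / n_per_group)
    # Standard error of the difference if the assumed effect is real.
    se_alt = sqrt((p_exposed * (1.0 - p_exposed)
                   + p_control * (1.0 - p_control)) / n_per_group)
    diff = p_exposed - p_control
    return z.cdf((diff - z_alpha * se_null) / se_alt)


# Hypothetical risks: about 16.8% for smokers, 1% for non-smokers.
for n in (1, 10, 50, 200):
    power = approx_power(0.168, 0.01, n)
    print(f"n={n:4d}  power={power:.3f}  Type II error={1 - power:.3f}")
```

The approximation is crude for very small samples, but the trend is the point: with one person per group (one aunt) the test almost always misses even a large effect, while a few hundred per group make a Type II error very unlikely.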

But the most misleading thing about the "problem" of induction is that it suggests a black-and-white criterion of evidence: either the evidence is conclusive, in which case we no longer need any further proof, or else the evidence is inconclusive, in which case no conclusion can be drawn at all. Fair enough, no amount of empirical data, however voluminous, can attest to the truth of a theory with absolute certainty. But you don't dismiss the (well-proven) laws of thermodynamics every time a crackpot claims he's invented a perpetual motion device ("Extraordinary claims require extraordinary evidence", as Carl Sagan famously said). Most importantly, acknowledging that all scientific knowledge is provisional and subject to falsification does not imply that all scientific theories are equal. The continuing collection of evidence, not just from repetitions of the same experiments but from widely different angles, together with the integration of scientific results into more general, already accepted theories, enables a theory to evolve from "just a theory" (without evidence) into one so strong that we can rely on it being true with close to 100% certainty.

Between 0% and 100% lies a wide range of intermediate probabilities of a theory or statement being true[2]. As soon as you realize that, the problem of induction evaporates. You don't have to bet your life on all swans being white just because nobody has ever observed a black one. But at least you can draw a tentative conclusion, for example that "in areas where many people live, and where large, strange animals are unlikely to remain unobserved for very long, we're unlikely to find black swans". Science is so much subtler than the simplistic caricatures some philosophers make of it.
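A deliberately simplified Bayesian sketch shows how such an intermediate probability behaves. It compares only two hypotheses: H ("all swans are white") and a single hypothetical alternative in which a fraction `black_fraction` of swans is black. The prior of 0.5, the value 0.01 for that fraction, and the assumption that sightings are independent are all illustrative choices, not claims about real swans; `posterior_all_white` is a made-up name.

```python
def posterior_all_white(prior: float, black_fraction: float, n_white: int) -> float:
    """P(all swans are white | n_white white sightings and no black ones),
    compared against one alternative in which a fraction of swans is black."""
    # Likelihood of n white sightings: 1 under H, (1 - f)^n under the alternative.
    likelihood_alt = (1.0 - black_fraction) ** n_white
    # Bayes' rule with only two competing hypotheses.
    return prior / (prior + (1.0 - prior) * likelihood_alt)


for n in (0, 10, 100, 1000):
    print(n, round(posterior_all_white(0.5, 0.01, n), 5))
```

The posterior climbs toward 1 with every white swan but never reaches it for any finite sample, while a single black sighting would set the likelihood under H to zero. Between those two extremes the number carries real, usable information.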

In the philosophy of science, the black swan is often used as an argument against a position known as naïve inductivism: the belief that knowledge about the world can be attained by simple enumeration of instances, as in the white swan example. I doubt there are any scientists (past or present) who hold such a position. Thus the Black Swan argument, when used as an attack on science, is a typical straw man: it purports to expose the limitations of science by refuting a view that scientists don't actually hold. But it gets worse. The argument is also used to support a hopelessly impractical philosophy known as radical skepticism. As I explained in an earlier post, a radical skeptic doesn't merely reject this or that assertion; she rejects the possibility of knowing anything at all, on the grounds that no evidence can ever be sufficient.

Taleb leans dangerously close to that position when he complains about the infinite regress a statistician faces: "You don't know if your sample size is insufficient unless you already know the probability distribution. But you can't know the probability distribution unless you have sufficient data."[3] Strictly speaking he's right, just as he's right that you can't be sure all swans are white after observing a million positive instances. But it doesn't follow that you are completely in the dark, no matter how many observations you've made. The way out of the infinite regress is to acknowledge that, no, you can never be 100% sure you have sufficient data, but as the sample size grows, so does the probability that you have enough observations to draw conclusions, especially if you try hard to find negative evidence rather than positive evidence.
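A standard result shows how the regress can be softened. Suppose (and this assumption is itself the crux) that the swans you see are an independent random sample, and that an unknown fraction f of all swans is black. After n white swans and no black ones you still cannot know f, but you can bound it: the exact one-sided 95% upper bound is 1 - 0.05^(1/n), which is close to 3/n (the "rule of three"). Conversely, if f were known, you could ask how many sightings give a 95% chance of seeing at least one black swan. The function names below are hypothetical.

```python
import math


def upper_bound_black_fraction(n_white: int, confidence: float = 0.95) -> float:
    """Exact one-sided upper confidence bound on the black fraction f after
    n_white white sightings and zero black ones: solves (1 - f)^n = 1 - confidence."""
    return 1.0 - (1.0 - confidence) ** (1.0 / n_white)


def sightings_needed(black_fraction: float, prob: float = 0.95) -> int:
    """Smallest n such that P(at least one black swan in n sightings) >= prob."""
    return math.ceil(math.log(1.0 - prob) / math.log(1.0 - black_fraction))


# Compare the exact bound with the rule-of-three approximation 3/n.
for n in (10, 100, 1000):
    print(n, round(upper_bound_black_fraction(n), 5), round(3 / n, 5))

print(sightings_needed(0.01))  # 299
```

The bound shrinks steadily as n grows, which is the gradual increase in confidence described above. It is only as good as the independent-sampling assumption, though, which is why actively hunting for negative evidence (other continents, not just the same pond) matters more than piling up ever more sightings of the same kind.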

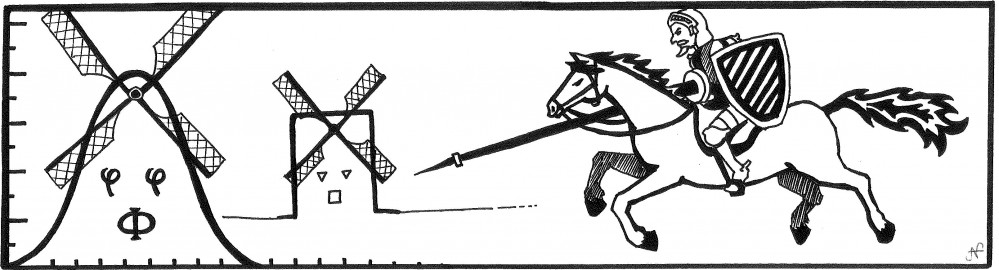

Getting back to Taleb's choice of the Black Swan metaphor: he himself acknowledges that the philosophical Black Swan is only a weak metaphor for the highly improbable event in finance, because, unlike a major stock market crash wiping out billions of dollars, the discovery of black swans in Australia had no dramatic consequences. Still, in the following chapters I will argue that there is a deep similarity between the Black Swan of the problem of induction and its counterpart in finance: both are extremely superficial creatures, relevant only if you insist on reducing science, with all its intricacies and subtleties, to an absurdly simplistic straw man. In the end, the Black Swan is nothing but a Red Herring, an irrelevant concept that distracts us from the real issues confronting traders, risk managers, senior managers and academics. Nevertheless, with the combativeness and tenacity of Don Quixote, Taleb fights on, as if determined to keep going until the last windmill has disappeared from the face of the earth.

Chapter summary: The sun will rise tomorrow, and let no eccentric philosopher tell you otherwise.

[1] Strictly speaking, the theory of relativity does not forbid faster-than-light speeds as such; it only forbids accelerating from a subluminal speed to a superluminal speed.

[2] Assigning probabilities to a hypothesis is fundamental to Bayesian statistics. But even in the classical Neyman-Pearson framework, where a hypothesis is either rejected or not rejected, the binary nature of a rejection is attenuated by the recognition of the possibility of type I and type II errors, and consequently, by the requirement that results be confirmed by independent replications of the experiment.

[3] Nassim N. Taleb: Black Swans and the Domains of Statistics, The American Statistician, August 2007